
Unnatural selection: Robots start to evolve


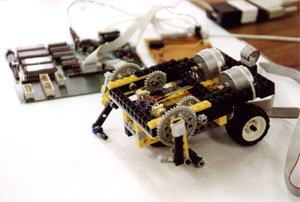

In this early version, wheels were used to steady the robot as it evolved efficient walking gaits (Image: Robert Gordon University)

Living creatures took millions of years to evolve from amphibians to four-legged mammals - with larger, more complex brains to match. Now an evolving robot has performed a similar trick in hours, thanks to a software "brain" that automatically grows in size and complexity as its physical body develops.

Existing robots cannot usually cope with physical changes - the addition of a sensor or new type of limb, say - without a complete redesign of their control software, which can be time-consuming and expensive.

So artificial intelligence engineer Christopher MacLeod and his colleagues at the Robert Gordon University in Aberdeen, UK, created a robot that adapts to such changes by mimicking biological evolution. "If we want to make really complex humanoid robots with ever more sensors and more complex behaviours, it is critical that they are able to grow in complexity over time - just like biological creatures did," he says.

As animals evolved, additions of small groups of neurons on top of existing neural structures are thought to have allowed their brain complexity to increase steadily, he says, keeping pace with the development of new limbs and senses. In the same way, the brain of MacLeod's robot assigns new clusters of "neurons" to adapt to new additions to its body.

The robot is controlled by a neural network - software that mimics the brain's learning process. This comprises a set of interconnected processing nodes which can be trained to produce desired actions. For example, if the goal is to remain balanced and the robot receives inputs from sensors that it is tipping over, it will move its limbs in an attempt to right itself. Such actions are shaped by adjusting the importance, or weighting, of the input signals to each node. Certain combinations of these sensor inputs cause the node to fire a signal - to drive a motor, for example.
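The weighted-input firing rule described above can be sketched in a few lines of Python. This is only an illustration: the article does not say which neuron model the team used, so the simple threshold unit, the function name `node_fires`, and the sensor values and weights below are all assumptions.

```python
def node_fires(inputs, weights, threshold):
    """Return True when the weighted sum of the inputs reaches the threshold.

    A plain threshold unit, assumed here for illustration; the robot's
    actual neuron model is not described in the article.
    """
    activation = sum(x * w for x, w in zip(inputs, weights))
    return activation >= threshold


# Hypothetical tilt-sensor readings (left, right) and hypothetical weights.
tipping_left = [0.9, 0.1]
tipping_right = [0.1, 0.9]
weights = [1.0, -0.5]

# A node wired this way responds to a left tilt but not to a right tilt.
print(node_fires(tipping_left, weights, threshold=0.5))
print(node_fires(tipping_right, weights, threshold=0.5))
```

Changing the weights changes which sensor combinations make the node fire, which is the quantity that training or evolution adjusts.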
If the resulting action works, the combination of weightings is kept. If it fails, and the robot falls over, the robot will make adjustments and try something different next time.

Finding the best combinations is not easy - so roboticists often use an evolutionary algorithm (EA) to "evolve" the optimal control system. The EA randomly creates large numbers of control "genomes" for the robot. These behaviour patterns are tested in training sessions, and the most successful genomes are "bred" together to create still better versions - until the best control system is arrived at.

MacLeod's team took this idea a step further, however, and developed an incremental evolutionary algorithm (IEA) capable of adding new parts to its robot brain over time.

The team started with a simple robot the size of a paperback book, with two rotatable pegs for legs that could be turned by motors through 180 degrees. They then gave the robot's six-neuron control system its primary command - to travel as far as possible in 1000 seconds. The software then set to work evolving the fastest form of locomotion to fulfil this task.

"It fell over mostly, in a puppyish kind of way," says MacLeod. "But then it started moving forward and not falling over straight away - and then it got better and better until it could eventually hop along the bench like a mudskipper."

When the IEA realises that its evolutions are no longer improving the robot's speed, it freezes the neural network it has evolved, denying it the ability to evolve further. That network knows how to work the peg legs - and it will continue to do so.

At this point, it is just like any other evolved robot: it would be unable to cope with the addition of knee-like joints, say, or more legs. But unlike conventional EAs, the IEA is sensitive to a sudden inability to live up to its primary command. So when the team fixed jointed legs to their robot's pegs, the software "realised" that it had to learn how to walk all over again. To do this, it automatically assigned itself fresh neurons to learn how to control its new legs.
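The evolve, freeze and extend cycle described above can be illustrated with a toy sketch. It is not the team's IEA: the genome here is just a list of weights, the fitness function is a made-up stand-in for "distance travelled", and every name and parameter (`evolve`, `breed`, `make_fitness`, `patience`, `sigma`, the target values) is hypothetical.

```python
import random


def breed(a, b, rng, sigma):
    """Uniform crossover of two parent genomes plus Gaussian mutation."""
    return [rng.choice(pair) + rng.gauss(0, sigma) for pair in zip(a, b)]


def evolve(frozen, n_new, fitness, rng, pop_size=20, patience=15,
           sigma=0.3, max_generations=500):
    """Evolve n_new fresh weights while the frozen weights stay fixed.

    Stops once the best score has not improved for `patience` generations
    (or after max_generations), then returns frozen + best new weights.
    """
    population = [[rng.uniform(-1, 1) for _ in range(n_new)]
                  for _ in range(pop_size)]
    best, best_score, stale = None, float("-inf"), 0
    for _ in range(max_generations):
        ranked = sorted(population, key=lambda g: fitness(frozen + g),
                        reverse=True)
        top_score = fitness(frozen + ranked[0])
        if top_score > best_score:
            best, best_score, stale = ranked[0], top_score, 0
        else:
            stale += 1
            if stale >= patience:
                break  # no longer improving: this stage is finished
        parents = ranked[: pop_size // 4]
        # Keep the best genome unchanged and refill the rest by breeding.
        population = [best] + [
            breed(rng.choice(parents), rng.choice(parents), rng, sigma)
            for _ in range(pop_size - 1)
        ]
    return frozen + best


def make_fitness(target):
    """Toy fitness (assumption): closer to `target` scores higher."""
    return lambda w: -sum((wi - ti) ** 2 for wi, ti in zip(w, target))


rng = random.Random(0)
peg_target = [0.5, -0.2]               # stand-in for the peg-leg gait
knee_target = peg_target + [0.8, 0.1]  # stand-in after adding jointed legs

stage1 = evolve([], 2, make_fitness(peg_target), rng)
stage2 = evolve(stage1, 2, make_fitness(knee_target), rng)

print("stage 1 weights:", stage1)
print("stage 2 weights:", stage2)
```

In the second call the first two weights are passed in as `frozen`, so only the two new weights are searched. That mirrors the article's point that the already trained "hip" network is left untouched while fresh neurons learn to control the new joints.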

As the IEA runs again, the leg below the "knee" is initially wobbly, but the existing peg-leg "hip" is already trained. "So it flops about, but with more purpose to it," says MacLeod. "Eventually the knee joint works and the robot evolves a salamander-like motion."

Once the primary command has been fulfilled once again, the IEA freezes that second neural network. When two more jointed legs are added to the rear of the robot, the software once again adds more neurons and this time evolves a four-legged trotting motion, and so on.

The robot can also adapt to newly acquired vision, and learn how to avoid or seek light when given a camera. "This is just like the way the brain evolved, building up in layers," MacLeod says (Engineering Applications of Artificial Intelligence, DOI: 10.1016/j.engappai.2008.11.002).

Kevin Warwick, head of cybernetics at the University of Reading in the UK, is far from convinced. He says just adding more neurons to the brain as things change is not enough; the entire neural structure must also adapt. "[MacLeod's] approach will result in many more neurons being needed to do the job badly, when a smaller number of neurons would have done well," he says.

MacLeod says the team ran tests in which the whole "brain" was able to re-evolve, but the system became too complex and simply ground to a halt.

But he is now taking his idea a step further, with a simulated robot that not only evolves its own way of moving, but also decides how many legs and sensors it needs to carry out a given task most effectively. He is confident the technique will help to build more advanced robots. In particular, the software could make humanoid robots and prosthetic limbs more versatile, he says. "It can build layer-upon-layer of complexity to fulfil tasks in an open-ended way."

Source: New Scientist

